Fundamentals of Linear Regression

The Basics:

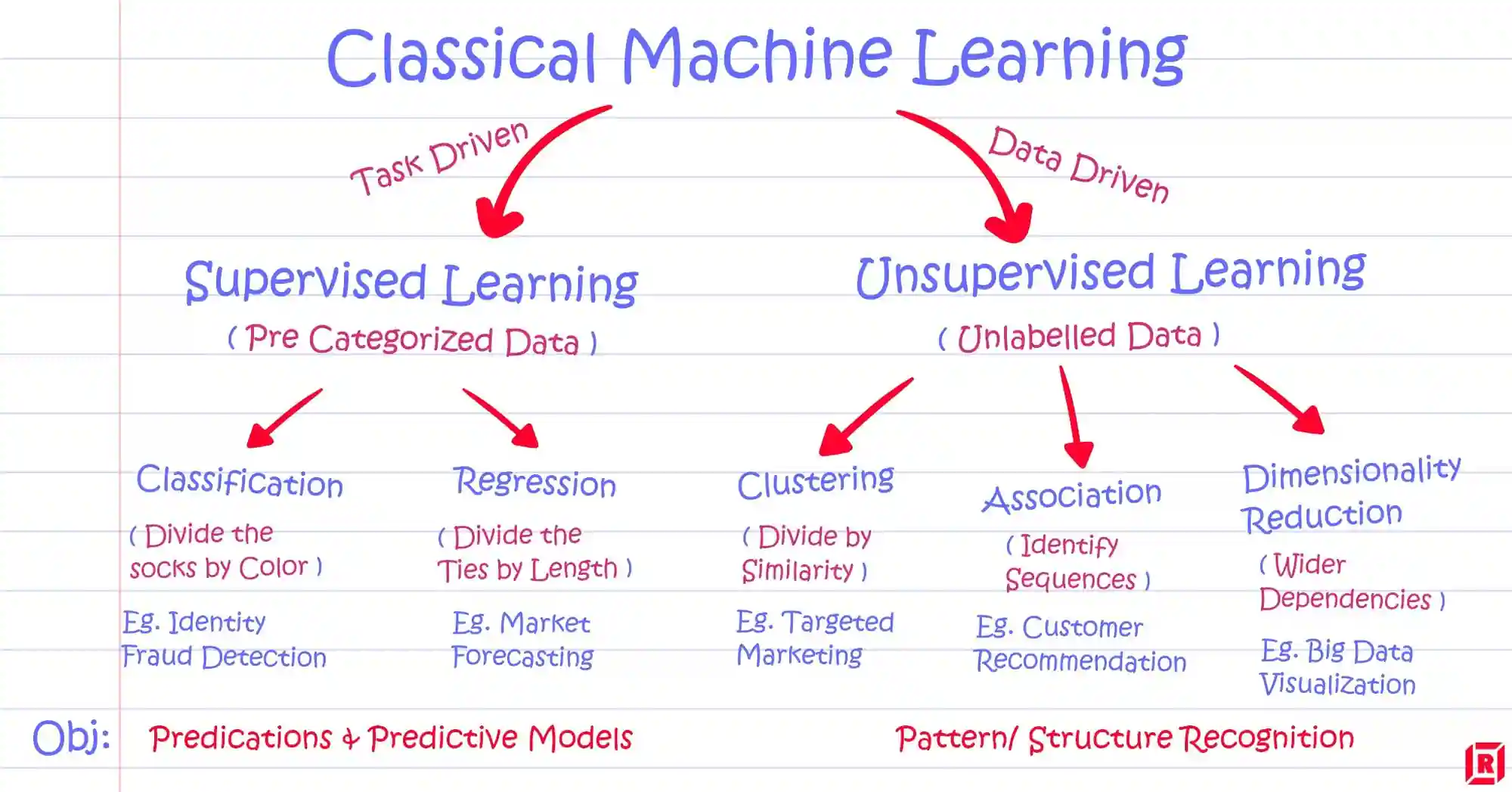

Machine learning can be divided into two parts: supervised (task-driven) and unsupervised (data-driven) learning:

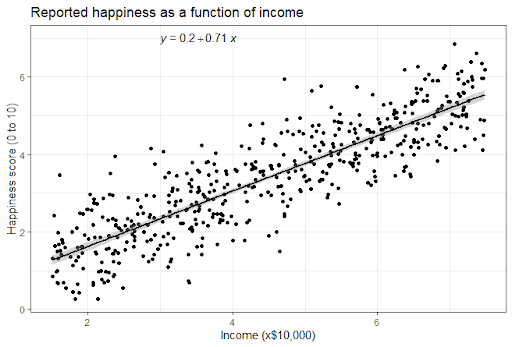

Now let’s start with linear regression:

Linear Regression

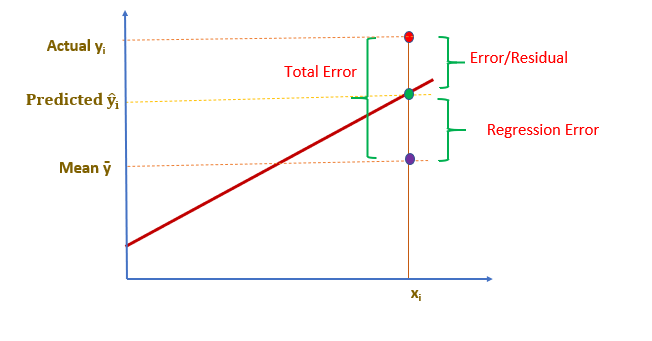

- Our aim: find the best-fit line, i.e. the line that gives the minimal error between its predictions and the actual data points.
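One common way to make "best fit" precise is ordinary least squares: choose the slope $m$ and intercept $c$ of $y = mx + c$ that minimise the sum of squared vertical distances between the points and the line. For a single input variable this has a closed-form solution. The sketch below is a swapped-in illustration (the rest of this article works towards gradient descent instead); the function name `fit_line_least_squares` and the sample data are hypothetical.

```python
# A minimal sketch: fit y = m*x + c by ordinary least squares (closed form).
# fit_line_least_squares and the sample data are illustrative, not from the article.

def fit_line_least_squares(xs, ys):
    """Return (m, c) minimising the sum of squared errors for y = m*x + c."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Slope = covariance(x, y) / variance(x) (the 1/n factors cancel)
    num = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    den = sum((x - x_mean) ** 2 for x in xs)
    m = num / den
    # The least-squares line always passes through (x_mean, y_mean)
    c = y_mean - m * x_mean
    return m, c

xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.0, 5.0, 7.0, 9.0, 11.0]   # points lying exactly on y = 2x + 1
m, c = fit_line_least_squares(xs, ys)
print(m, c)  # prints: 2.0 1.0
```

Because these points lie exactly on a line, the fitted line has zero error; with noisy data the same formula returns the line with the smallest total squared error.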

Okay, now what about the maths behind it?

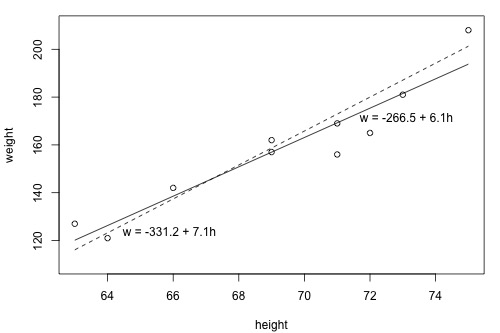

- Well, it’s nothing but your very own favourite: the slope-intercept equation of a line, $y = mx + c$

- where $m$ is the slope (or coefficient)

- and $c$ is the intercept

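- We’ll also have a hypothesis function, which is simply the line we use to make predictions: $h(x) = mx + c$

- Next comes the cost function (commonly the mean squared error), which measures how far the hypothesis’s predictions are from the actual values; our main goal is to minimize it.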
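To make the two functions concrete, here is a small Python sketch. It assumes the cost is the plain mean squared error, $J(m, c) = \frac{1}{n}\sum_{i=1}^{n}\big(h(x_i) - y_i\big)^2$; some texts use a $\frac{1}{2n}$ factor instead, which changes the value but not where the minimum lies. The names `hypothesis` and `mse_cost` are hypothetical.

```python
# Sketch: hypothesis h(x) = m*x + c and a mean squared error (MSE) cost.
# Assumption: the cost is plain MSE with a 1/n factor (some texts use 1/(2n)).

def hypothesis(x, m, c):
    """Predicted y for input x under the line y = m*x + c."""
    return m * x + c

def mse_cost(xs, ys, m, c):
    """Average of the squared differences between predictions and targets."""
    n = len(xs)
    return sum((hypothesis(x, m, c) - y) ** 2 for x, y in zip(xs, ys)) / n

xs = [1.0, 2.0, 3.0]
ys = [3.0, 5.0, 7.0]                # generated by y = 2x + 1
print(mse_cost(xs, ys, 2.0, 1.0))   # the true line: 0.0
print(mse_cost(xs, ys, 2.0, 0.0))   # every prediction 1 too low: 1.0
```

A worse line gives a larger cost, which is exactly why minimizing the cost leads to the best-fit line.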

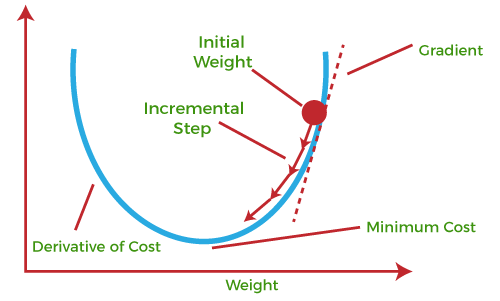

Gradient Descent

- If we repeatedly move in the direction of the negative gradient (away from the gradient of the function at the current point), we move towards a local minimum of that function.

- If we instead move in the direction of the positive gradient (along the gradient at the current point), we move towards a local maximum of that function; this variant is called gradient ascent.

- The main objective of gradient descent here is to minimize the cost function iteratively: at each step, the parameters $m$ and $c$ are nudged by a small amount in the direction of the negative gradient.
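As a sketch of the idea, assuming the MSE cost $J(m, c) = \frac{1}{n}\sum_{i}(mx_i + c - y_i)^2$, the partial derivatives are $\frac{\partial J}{\partial m} = \frac{2}{n}\sum_i (mx_i + c - y_i)\,x_i$ and $\frac{\partial J}{\partial c} = \frac{2}{n}\sum_i (mx_i + c - y_i)$, and each step updates $m \leftarrow m - \alpha \frac{\partial J}{\partial m}$, $c \leftarrow c - \alpha \frac{\partial J}{\partial c}$, where $\alpha$ is the learning rate. The function name `gradient_descent`, the learning rate and the iteration count below are illustrative assumptions, not tuned values.

```python
# Sketch: batch gradient descent fitting y = m*x + c by minimising MSE.
# gradient_descent, learning_rate and n_iters are illustrative choices.

def gradient_descent(xs, ys, learning_rate=0.05, n_iters=2000):
    m, c = 0.0, 0.0                     # start from an arbitrary line
    n = len(xs)
    for _ in range(n_iters):
        # Residuals of the current line: prediction minus target
        errors = [m * x + c - y for x, y in zip(xs, ys)]
        # Partial derivatives of J(m, c) = (1/n) * sum(error^2)
        grad_m = (2 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_c = (2 / n) * sum(errors)
        # Step against the gradient, i.e. downhill on the cost surface
        m -= learning_rate * grad_m
        c -= learning_rate * grad_c
    return m, c

xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.0, 5.0, 7.0, 9.0, 11.0]         # points lying exactly on y = 2x + 1
m, c = gradient_descent(xs, ys)
print(round(m, 3), round(c, 3))
```

If the learning rate is too large, the updates overshoot and the cost grows instead of shrinking; if it is too small, convergence is slow. This version uses the whole dataset for every step (batch gradient descent); stochastic and mini-batch variants use one example or a subset per step instead.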

So the outline will be: